In the previous article – Build and distribute custom Image Mode for RHEL Containers – we created our application image and published it in Satellite. We now want to create servers based on this image. There are different ways to do this (see the official Red Hat documentation, “Using image mode for RHEL to build, deploy, and manage operating systems”), but for now let’s use the bootc-image-builder tool to generate a disk image.

Adding bootc-image-builder to Red Hat Satellite

bootc-image-builder is a containerised tool that we use to build disk images in formats such as qcow2, vmdk (VMware), ami (AWS) and ISO. As we’re using Satellite, we begin by adding the official bootc-image-builder image to Red Hat Satellite and synchronising it. As before, we can do this in the UI or use Ansible. Here’s the Ansible code to achieve this:

---

- name: Configure bootc product and bootc-image-builder repository

hosts: all

connection: local

gather_facts: no

collections:

- redhat.satellite

vars:

satellite_admin_user: admin

satellite_admin_password: !vault |

$ANSIBLE_VAULT;1.2;AES256;

3763656136XXXX

my_satellite_product:

- name: rhel-bootc

label: rhel-bootc

description: RHEL BootC

organization: Example Organization

my_satellite_repository:

- name: rhel9/bootc-image-builder

label: rhel9_bootc-image-builder

description: RHEL BootC

organization: Example Organization

product: rhel-bootc

content_type: docker

url: "https://registry.redhat.io"

docker_upstream_name: rhel9/bootc-image-builder

unprotected: true

upstream_username: username

upstream_password: !vault |

$ANSIBLE_VAULT;1.2;AES256;

37636XXXX

download_policy: on_demand

mirroring_policy: mirror_content_only

validate_certs: true

exclude_tags: "*-source"

tasks:

- name: Check satellite_admin_password is provided

fail:

msg: satellite_admin_password needs to be supplied

when:

- "satellite_admin_password is not defined"

- name: Set Satellite URL

set_fact:

satellite_url: "https://{{ inventory_hostname }}"

- name: "Configure Products"

redhat.satellite.product:

username: "{{ satellite_admin_user }}"

password: "{{ satellite_admin_password }}"

server_url: "{{ satellite_url }}"

organization: "{{ product.organization }}"

name: "{{ product.name }}"

description: "{{ product.description }}"

label: "{{ product.label }}"

when: product is defined

loop: "{{ my_satellite_product }}"

loop_control:

loop_var: product

- name: "Configure Repositories"

redhat.satellite.repository:

username: "{{ satellite_admin_user }}"

password: "{{ satellite_admin_password }}"

server_url: "{{ satellite_url }}"

organization: "{{ repository.organization }}"

name: "{{ repository.name }}"

description: "{{ repository.description }}"

label: "{{ repository.label }}"

product: "{{ repository.product }}"

content_type: "{{ repository.content_type }}"

url: "{{ repository.url }}"

docker_upstream_name: "{{ repository.docker_upstream_name }}"

unprotected: "{{ repository.unprotected }}"

upstream_username: "{{ repository.upstream_username }}"

upstream_password: "{{ repository.upstream_password }}"

download_policy: "{{ repository.download_policy }}"

validate_certs: "{{ repository.validate_certs }}"

mirroring_policy: "{{ repository.mirroring_policy }}"

include_tags: "{{ repository.include_tags | default(omit) }}"

exclude_tags: "{{ repository.exclude_tags }}"

when: repository is defined

loop: "{{ my_satellite_repository }}"

loop_control:

loop_var: repository

- name: "Sync Repositories"

redhat.satellite.repository_sync:

username: "{{ satellite_admin_user }}"

password: "{{ satellite_admin_password }}"

server_url: "{{ satellite_url }}"

organization: "{{ repository.organization }}"

product: "{{ repository.product }}"

repository: "{{ repository.name }}"

when: repository is defined

loop: "{{ my_satellite_repository }}"

loop_control:

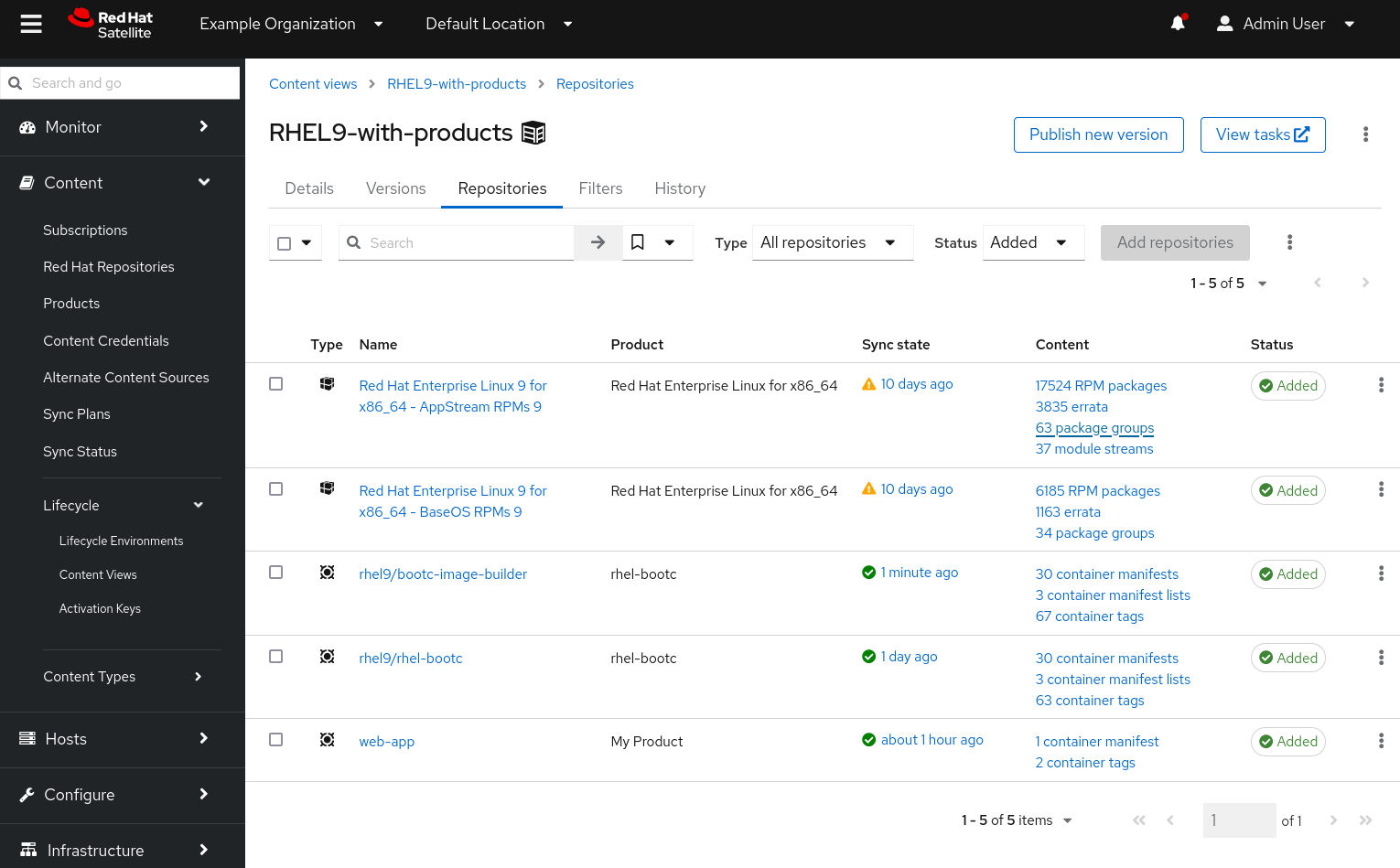

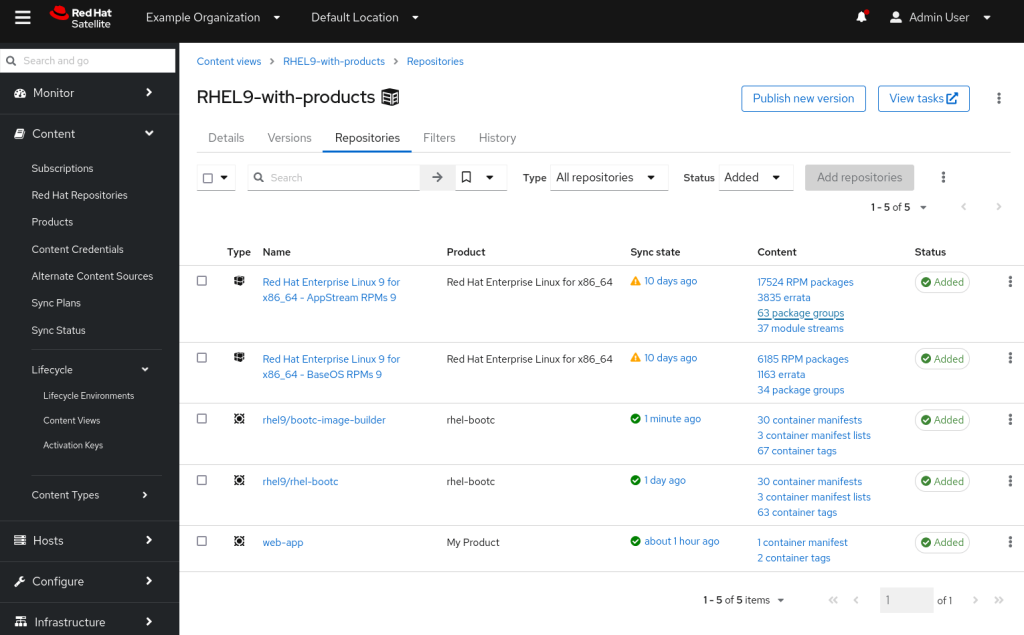

loop_var: repositoryOnce synchronised, we can add this to our content view and publish it:

With the new version of the content view published and promoted to our dev-test lifecycle environment, we can see the bootc-image-builder image available on our build server:

```
[devuser@buildserver ~]$ podman search satellite.london.example.com/ | grep builder
satellite.london.example.com/example_organization-dev-test-rhel9-with-products-rhel-bootc-rhel9_bootc-image-builder
```
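The long repository name above appears to follow Satellite's default registry name pattern. That pattern joins the organization, lifecycle environment, content view, product and repository labels with hyphens, then lowercases the result. The sketch below rebuilds the name under that assumption. The function name satellite_image_name is hypothetical. The organization label Example_Organization and the content view label rhel9-with-products are inferred from the observed name rather than stated anywhere in the setup.

```bash
#!/bin/bash
# Hypothetical helper: rebuild a Satellite container repository name from its
# labels, ASSUMING Satellite's default registry name pattern:
#   organization-lifecycle_environment-content_view-product-repository (lowercased)

# satellite_image_name ORG_LABEL LCE_LABEL CV_LABEL PRODUCT_LABEL REPO_LABEL
satellite_image_name() {
  local name="$1-$2-$3-$4-$5"
  # Registry repository names must be lowercase; ${var,,} requires bash 4+.
  printf '%s\n' "${name,,}"
}
```

Running `satellite_image_name Example_Organization dev-test rhel9-with-products rhel-bootc rhel9_bootc-image-builder` prints the same name that podman search returned above. Prefix it with satellite.london.example.com/ to get the full image reference.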

A QCOW2 build script

As the user ‘devuser’ on our build server, we’ll create the following build_qcow.sh shell script:

```bash
#!/bin/bash
# Run bootc-image-builder (synchronised from Satellite) to turn our application
# image into a QCOW2 disk image. The options after the builder image are passed
# to bootc-image-builder itself; results are written to ./output.
podman run \
  --rm \
  -it \
  --privileged \
  --pull=newer \
  --security-opt label=type:unconfined_t \
  -v /var/lib/containers/storage:/var/lib/containers/storage \
  -v ./config.toml:/config.toml \
  -v ./output:/output \
  satellite.london.example.com/example_organization-dev-test-rhel9-with-products-rhel-bootc-rhel9_bootc-image-builder:latest \
  --tls-verify=false \
  --type qcow2 \
  --config /config.toml \
  satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app
```

The script passes bootc-image-builder the options it needs to generate our custom QCOW2 image. To make it more flexible, we could parameterise it, for example to select a different output format or a different source image.
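One way to do that is to move the podman call from build_qcow.sh into shell functions. The disk type, source image and customisation file then become parameters. This is a sketch under assumptions: the file name build_image.sh and the functions bib_args and build_disk_image are hypothetical. The podman and bootc-image-builder options are the same ones used in build_qcow.sh.

```bash
#!/bin/bash
# Hypothetical build_image.sh: the podman call from build_qcow.sh wrapped in
# functions so that the disk type, source image and customisation file are
# parameters. Source it from a root shell: bootc-image-builder needs rootful podman.

# Builder image synchronised from Satellite (override by exporting BUILDER).
BUILDER="${BUILDER:-satellite.london.example.com/example_organization-dev-test-rhel9-with-products-rhel-bootc-rhel9_bootc-image-builder:latest}"

# bib_args TYPE IMAGE [CONFIG]
# Fill the global array BIB_ARGS with the arguments for "podman".
# CONFIG should start with ./ or / so podman treats it as a bind mount,
# not as a named volume.
bib_args() {
  local disk_type="$1" image="$2" config="${3:-./config.toml}"
  BIB_ARGS=(
    run --rm -it --privileged --pull=newer
    --security-opt label=type:unconfined_t
    -v /var/lib/containers/storage:/var/lib/containers/storage
    -v "${config}:/config.toml"
    -v ./output:/output
    "${BUILDER}"
    --tls-verify=false
    --type "${disk_type}"
    --config /config.toml
    "${image}"
  )
}

# build_disk_image TYPE IMAGE [CONFIG]
# Create the output directory, then run bootc-image-builder via podman.
build_disk_image() {
  bib_args "$@"
  mkdir -p ./output
  podman "${BIB_ARGS[@]}"
}
```

From a root shell in the build directory, you could run `source ./build_image.sh` and then `build_disk_image vmdk satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app`. That requests a VMware disk instead of a QCOW2 one. Collecting the arguments in an array keeps each option a separate word, so a customisation file path containing spaces is passed through intact.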

Hint: bootc-image-builder has an option called --local. You would use it if you had already pulled your image into local container storage. If you accidentally use --local with a remote image, you’ll get a confusing error such as:

```
Error: cannot build manifest: cannot get container size: failed inspect image: exit status 125, stderr:
time="2024-05-30T12:56:34Z" level=error msg="Refreshing container 4b19b9e08b446355a7d640d8f1210b23735f49383503f293f2592a0d666638cd: acquiring lock 0 for container 4b19b9e08b446355a7d640d8f1210b23735f49383503f293f2592a0d666638cd: file exists"
time="2024-05-30T12:56:34Z" level=error msg="Refreshing volume 9208e1c32b2ba2759221c11520e54d5bfa79ac35c719e9d927cc8944ae5167cb: acquiring lock 1 for volume 9208e1c32b2ba2759221c11520e54d5bfa79ac35c719e9d927cc8944ae5167cb: file exists"
time="2024-05-30T12:56:34Z" level=error msg="Refreshing volume ce89fcfb2288ddcb6c2ee7690c51c2c863665583d63c69b57db90753244f093c: acquiring lock 2 for volume ce89fcfb2288ddcb6c2ee7690c51c2c863665583d63c69b57db90753244f093c: file exists"
Error: satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app: image not known
```

The solution is NOT to use the --local flag if you are using a remote registry.

Notice that the script passes a configuration file called config.toml to bootc-image-builder. This is a customisation file; here is the sample file we’ll use in our demo:

```toml
[[blueprint.customizations.user]]
name = "ansible"
password = "something"
key = "ssh-rsa xxxx user@example.com"
groups = ["wheel"]
```

Here we create an ansible user in the privileged wheel group and give it a password and an SSH public key.

Build the image

We create the output directory and then attempt to run the build_qcow.sh script:

```
[devuser@buildserver ~]$ mkdir output
[devuser@buildserver ~]$ ./build_qcow.sh
Error: cannot validate the setup: this command must be run in rootful (not rootless) podman
2024/05/30 10:44:04 error: cannot validate the setup: this command must be run in rootful (not rootless) podman
```

Unfortunately, bootc-image-builder must be run with rootful podman, so let’s run the same script with sudo (some of the output below is truncated to save space):

```
[devuser@buildserver ~]$ sudo ./build_qcow.sh
[sudo] password for devuser:
Trying to pull satellite.london.example.com/example_organization-dev-test-rhel9-with-products-rhel-bootc-rhel9_bootc-image-builder:latest...
Getting image source signatures
Copying blob 57eed2957185 done |
Copying blob 6b20e0c6c1e1 done |
Copying blob 0419460afd52 done |
Copying config 055045daef done |
Writing manifest to image destination
Generating manifest manifest-qcow2.json
DONE
Building manifest-qcow2.json
starting -Pipeline source org.osbuild.containers-storage: 2e7448c0eec6ef342dcab5f929a56177193067b84de8471e683e8e540c7b6fed
Build
  root: <host>
Pipeline build: 228e974f3c469a30f7e94eec6c1e8cbf35e91343664be5dcb4c75a3bcf58329e
Build
  root: <host>
  runner: org.osbuild.rhel82 (org.osbuild.rhel82)
org.osbuild.container-deploy: e1f777e86d6ec0a729c206dc8decf09eab851c71d78a8946835c17447ca85997 {}
Getting image source signatures
Copying blob sha256:a0f3852853f38cc684deb805539a19625bd607f14125e5cd29af126437559ce7
....
Writing manifest to image destination
}
⏱ Duration: 0s
org.osbuild.mkfs.fat: e123931b1df3eb7c7f2fe73705e8d831e8904e9344c6f7688c5aba3875f67dee {
  "volid": "7B7795E7"
}
device/device (org.osbuild.loopback): loop0 acquired (locked: True)
mkfs.fat 4.2 (2021-01-31)
⏱ Duration: 0s
org.osbuild.mkfs.ext4: 91d3e83a89f7fc33c771f7a74f20ef2af360b24157fb817dbd18819562cc17b8 {
  "uuid": "fb6ed1a0-a2e4-49fb-a099-00c940bf256b",
  "label": "boot"
}
device/device (org.osbuild.loopback): loop0 acquired (locked: True)
mke2fs 1.46.5 (30-Dec-2021)
Discarding device blocks: done
Creating filesystem with 262144 4k blocks and 65536 inodes
Filesystem UUID: fb6ed1a0-a2e4-49fb-a099-00c940bf256b
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376

Allocating group tables: done
Writing inode tables: done
Creating journal (8192 blocks): done
Writing superblocks and filesystem accounting information: done
⏱ Duration: 0s
org.osbuild.mkfs.xfs: c35dfa80bb2c7a241d5443ddb647fbddb0c0e844d90ca6898f11f6f01aa70c70 {
  "uuid": "774cfb96-88ac-4e88-8063-de7b94bd223e",
  "label": "root"
}
device/device (org.osbuild.loopback): loop0 acquired (locked: True)
meta-data=/dev/loop0             isize=512    agcount=4, agsize=557631 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1    bigtime=1 inobtcount=1 nrext64=0
data     =                       bsize=4096   blocks=2230523, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=16384, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
Discarding blocks...Done.
⏱ Duration: 0s
org.osbuild.bootc.install-to-filesystem: 2bc3d583c25ec07907367f037ebd44c46d97e1fd8c0e61e237af83567dedf2f3 {
  "kernel-args": [
    "rw",
    "console=tty0",
    "console=ttyS0"
  ],
  "target-imgref": "satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app"
}
device/disk (org.osbuild.loopback): loop0 acquired (locked: False)
mount/part4 (org.osbuild.xfs): mounting /dev/loop0p4 -> /store/tmp/buildroot-tmp-0ah5dw_c/mounts/
mount/part3 (org.osbuild.ext4): mounting /dev/loop0p3 -> /store/tmp/buildroot-tmp-0ah5dw_c/mounts/boot
mount/part2 (org.osbuild.fat): mounting /dev/loop0p2 -> /store/tmp/buildroot-tmp-0ah5dw_c/mounts/boot/efi
Host kernel does not have SELinux support, but target enables it by default; this is less well tested. See https://github.com/containers/bootc/issues/419
Installing image: docker://satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app
Initializing ostree layout
Initializing sysroot
ostree/deploy/default initialized as OSTree stateroot
Deploying container image
Loading usr/lib/ostree/prepare-root.conf
Deployment complete
Running bootupctl to install bootloader
Installed: grub.cfg
Installed: "redhat/grub.cfg"
Trimming boot
/run/osbuild/mounts/boot: 911.3 MiB (955527168 bytes) trimmed
Finalizing filesystem boot
Trimming mounts
/run/osbuild/mounts: 6.7 GiB (7233822720 bytes) trimmed
Finalizing filesystem mounts
Installation complete!
mount/part2 (org.osbuild.fat): umount: /store/tmp/buildroot-tmp-0ah5dw_c/mounts/boot/efi unmounted
mount/part3 (org.osbuild.ext4): umount: /store/tmp/buildroot-tmp-0ah5dw_c/mounts/boot unmounted
mount/part4 (org.osbuild.xfs): umount: /store/tmp/buildroot-tmp-0ah5dw_c/mounts/ unmounted
⏱ Duration: 32s
org.osbuild.fstab: d92d97a7e5ba56c17fee45ad1a143e2bc471c5c0728e4781055500e2a11fde06 {
  "filesystems": [
    {
      "uuid": "774cfb96-88ac-4e88-8063-de7b94bd223e",
      "vfs_type": "xfs",
      "path": "/",
      "options": "ro",
      "freq": 1,
      "passno": 1
    },
    {
      "uuid": "fb6ed1a0-a2e4-49fb-a099-00c940bf256b",
      "vfs_type": "ext4",
      "path": "/boot",
      "options": "ro",
      "freq": 1,
      "passno": 2
    },
    {
      "uuid": "7B77-95E7",
      "vfs_type": "vfat",
      "path": "/boot/efi",
      "options": "umask=0077,shortname=winnt",
      "passno": 2
    }
  ]
}
mount/part4 (org.osbuild.xfs): umount: /store/tmp/buildroot-tmp-cn4tdvch/mounts/ unmounted
⏱ Duration: 7s
Pipeline qcow2: 78180f601aba1fea51aada47c0d254380d70167be7f6aea96515015cd6d2bbec
Build
  root: <host>
  runner: org.osbuild.rhel82 (org.osbuild.rhel82)
org.osbuild.qemu: 78180f601aba1fea51aada47c0d254380d70167be7f6aea96515015cd6d2bbec {
  "filename": "disk.qcow2",
  "format": {
    "type": "qcow2",
    "compat": ""
  }
}
⏱ Duration: 41s
manifest - finished successfully
build: 228e974f3c469a30f7e94eec6c1e8cbf35e91343664be5dcb4c75a3bcf58329e
image: e9b7a8b03654652169a80e928719d8979e004b69a7447243766739b2bdaaa1c7
qcow2: 78180f601aba1fea51aada47c0d254380d70167be7f6aea96515015cd6d2bbec
vmdk: 388300171ab507deaebc0e56d94611b3763ca0b46548742224d416577e2a5e41
ovf: 12bfab6bd2d93e5fc744584eea71e70ad67a4e6c3fbe59ca7d6e5d602bcd46e4
archive: 96fee5ccae13df7cdca148cb29776871fa38cc8c3811774f86b00fff12e7f2c3
Build complete!
Results saved in
.
```

After a minute or so, the script completes. The output directory now holds a QCOW2 disk image and the osbuild manifest (manifest-qcow2.json):

```
[devuser@buildserver ~]$ ls -l output/manifest-qcow2.json output/qcow2/disk.qcow2
-rw-r--r--. 1 root root      16445 May 30 13:41 output/manifest-qcow2.json
-rw-r--r--. 1 root root 1032454144 May 30 13:43 output/qcow2/disk.qcow2
```

One Disk Image or Many?

Notice that, through the config.toml file, we created a disk image with a custom user account. We could use this same disk image to deploy into development, test, stage, production and QA environments. However, the same custom user account, with the same password and SSH key, would then exist in every environment. This may or may not be desirable.

The great thing about the customisation file is that you could have different files for different environments. This means that:

- You can create custom disk images for each environment

- You can have custom accounts on each environment

- All custom disk images are generated from the same RHEL application image, so everything is consistent aside from what’s in the customisation file

As with many sysadmin tasks, there are different ways to approach this. You might add common accounts (e.g. for monitoring or automation) to your RHEL application image and keep bespoke accounts for each environment in the customisation files. Or you might decide that all accounts can go in the base image and you don’t need customisation at all.
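If you go down the per-environment route, one customisation file per environment can be generated rather than maintained by hand. Here is a minimal sketch. The function write_config, the config-ENV.toml naming and the key file locations are hypothetical. The file layout mirrors the sample config.toml above, but this sketch sets only an SSH key and deliberately leaves out the plain-text password.

```bash
#!/bin/bash
# Hypothetical helper: write one bootc-image-builder customisation file per
# environment so that each disk image gets its own account. All names and
# paths passed to it are illustrative assumptions.

# write_config ENV USER PUBKEY_FILE OUTDIR
# Writes OUTDIR/config-ENV.toml, which puts USER in the wheel group and
# authorises the SSH public key read from PUBKEY_FILE.
write_config() {
  local env="$1" user="$2" keyfile="$3" outdir="$4" key
  key="$(<"${keyfile}")" || return 1   # $(<file) drops the trailing newline
  mkdir -p "${outdir}" || return 1
  cat > "${outdir}/config-${env}.toml" <<EOF
[[blueprint.customizations.user]]
name = "${user}"
key = "${key}"
groups = ["wheel"]
EOF
}
```

For example, `write_config prod ops ./keys/ops-prod.pub ./configs` would produce ./configs/config-prod.toml. That file could be mounted in place of ./config.toml in build_qcow.sh. Use a separate output directory per environment so the builds don't overwrite each other.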

Disk Image Updates

When we build servers from this disk image, it’s worth noting that by default bootc looks for updates from the same container image registry and tag used to create the disk image. In our case, we built from the “Dev-Test” Lifecycle environment of Satellite, so that will be the source of updates. In the next post – Creating Image Mode for RHEL Servers – we build virtual machines from this single disk image and then describe how to switch each of them to get updates from our Stage and Prod-DR Lifecycle environments.
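Because the lifecycle environment label is embedded in the image name, the reference for another environment can be derived from the current one. The sketch below makes these assumptions: the label appears as a hyphen-delimited segment, as in the names above, and the Stage environment's label is stage. That label value and the helper name switch_target are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: swap the lifecycle environment label embedded in a
# Satellite image reference, ASSUMING the label appears as "-LABEL-" in the
# name (as in example_organization-dev-test-rhel9-with-products-...).

# switch_target IMAGE_REF FROM_LCE TO_LCE
switch_target() {
  local ref="$1" from="$2" to="$3"
  # Refuse to guess if the source label is not present in the reference.
  if [[ "${ref}" != *"-${from}-"* ]]; then
    echo "error: '-${from}-' not found in ${ref}" >&2
    return 1
  fi
  # Replace the first occurrence only; the quoted label is matched literally.
  printf '%s\n' "${ref/-"${from}"-/-${to}-}"
}
```

`sudo bootc status` reports the image a server currently tracks. Passing the output of `switch_target satellite.london.example.com/example_organization-dev-test-rhel9-with-products-my-product-web-app dev-test stage` to `sudo bootc switch` would point the server at the Stage image. The new deployment takes effect on the next boot.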

Summary

We synchronised the bootc-image-builder image to our Red Hat Satellite server and made it available to the correct Lifecycle environment. We created a customisation file and a wrapper script around bootc-image-builder to generate customised disk images based on our custom application image. A custom QCOW2 disk image was successfully created, and it can now be used to create new servers.

Note

This post is not endorsed by or affiliated with Red Hat – the information provided is based on experience, documentation and publicly available information. Feel free to leave feedback at the end of this page if anything needs correction.

For an up-to-date roadmap discussion on Image Mode for RHEL, please contact your Red Hat account rep.